Virtualization technology brings security and operability to web applications

Fujitsu Laboratories Ltd. today announced that it has developed technology for web applications running on smart devices or wearables that delivers the same level of security as thin clients while offering an exceptional degree of operability. In recent years, there have been increasing expectations that the use of smart devices and wearables in a variety of front-line scenarios will lead to greater efficiency in business operations. When a high degree of confidentiality is required for the data used by these devices, such as patient data or confidential company data, thin client environments, which leave no trace of the data on the devices, are ideal from a security perspective. Thin client environments, however, frequently send and receive screen data. As a result, depending on the status of the mobile network or the processing performance on the device side, lags of up to about a second can occur, and operations that are unique to smart devices, such as swiping, are affected.

Fujitsu Laboratories has now developed new virtualization technology for web applications built for smart devices that automatically separates the user interface processing (UI processing) from the data processing. With this technology, data processing is executed in the cloud, and UI processing is executed on the smart device. As a result, new web applications running on smart devices or wearables can have an execution environment for work applications that is as secure as a thin client environment while achieving outstanding operability.

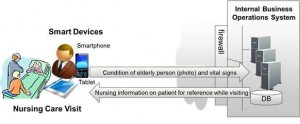

In recent years, the use of smart devices for work has become more common in a variety of settings. Moreover, as smart glasses and other wearables come into practical use, there are high expectations that linking wearables with smart devices will lead to greater efficiencies in business operations for people in the field (figure 1).

Technological Issues

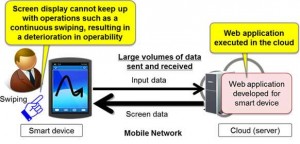

Web applications developed for smart devices may use data that have been stored on the devices themselves, such as camera images and sound recordings. In addition, once data received from the cloud are stored on the devices, the applications may execute business logic on them. When a high degree of confidentiality is desired for the data used by these devices, such as in the case of patient data or confidential company data, thin client environments, which leave no trace of the data on the devices, are ideal from a security perspective. The problem with thin client environments, however, is that, depending on the status of the mobile network or the processing performance on the device side, lags of up to about a second can occur and affect smart device operations such as swiping (figure 2).

The Newly Developed Technology

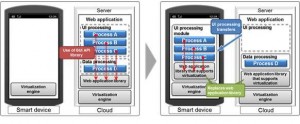

Fujitsu Laboratories has now developed a technology that places the source code of the developed web applications on a server. When web applications are executed on a smart device, they are automatically interpreted. This technology enables processing to be distributed with data processing handled by the server, and UI processing handled by the smart device (figures 3 and 4). The features of this technology are described below.

1. Distributed web applications

A newly developed virtualization engine, which runs on both the device and the server, performs tasks including the transfer of UI processing and the execution of processing content. In addition, the conventional web application library is replaced with a proprietary web application library that supports virtualization. When the engine executes a web application, it analyzes the source code and estimates which parts perform UI processing. It then separates out the part of the source code that is written against the UI-related APIs defined in the web application library and that is required to run the application. When the device notifies the server that it is executing the web application, the server sends the UI processing part of the source code, together with the virtualization-enabled web application library, to the smart device. The server executes the data processing, which is everything in the source code except the separated UI processing, while the smart device executes the transferred UI processing. Distributing execution in this way maintains security while achieving a high level of operability. Because the separation is performed dynamically when a web application is executed, no redesign or redevelopment work is needed to support the distributed processing.
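The separation step described above can be pictured with a minimal sketch. It is not Fujitsu's engine: the names `UI_API_NAMES`, `AppFunction`, and `partition`, and the sample application, are hypothetical and exist only for illustration. The sketch assumes each function of an application is summarized by the library APIs it calls, and that a function counts as UI processing if it calls at least one UI-related API.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical set of UI-related API names defined by the web application library.
UI_API_NAMES = frozenset({"render", "on_swipe", "show_dialog"})


@dataclass(frozen=True)
class AppFunction:
    name: str
    calls: Tuple[str, ...]  # library API names this function invokes


def partition(functions: List[AppFunction]) -> Tuple[List[str], List[str]]:
    """Split function names into (ui, data).

    A function goes to the UI part if it calls any UI-related API;
    everything else is treated as data processing and stays on the server.
    """
    ui, data = [], []
    for fn in functions:
        target = ui if UI_API_NAMES.intersection(fn.calls) else data
        target.append(fn.name)
    return ui, data


# Illustrative application, summarized as functions and the APIs they call.
app = [
    AppFunction("draw_chart_view", ("render",)),
    AppFunction("load_patient_record", ("db_query",)),
    AppFunction("handle_page_swipe", ("on_swipe", "render")),
    AppFunction("compute_dosage", ()),
]

ui_part, data_part = partition(app)
print("sent to device:", ui_part)
print("kept on server:", data_part)
```

In this toy model only the functions in `ui_part` would be transferred to the device, so the confidential records handled by the functions in `data_part` never leave the server.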

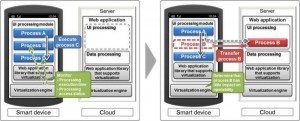

2. Distributed processing in accordance with operations

Fujitsu Laboratories also developed a feature that runs on the smart device and analyzes the user's operations, processing times, and frequency of operations. Based on this analysis, it dynamically transfers to the server those processes within the UI processing that have little impact on operability. The result is a secure system that also maintains a high level of operability.
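The article does not say how "little impact on operability" is judged, so the following is only one possible heuristic, sketched under stated assumptions. The names `OpStats` and `select_for_server`, the thresholds, and the sample measurements are all hypothetical. The sketch assumes a process can move to the server if it is not latency sensitive, is triggered rarely, and would still respond within a time budget after adding a network round trip.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OpStats:
    name: str
    calls_per_minute: float  # how often the user triggers the process
    local_ms: float          # measured processing time on the device
    latency_sensitive: bool  # e.g. swipe or scroll handlers


def select_for_server(stats: List[OpStats], round_trip_ms: float,
                      budget_ms: float = 150.0, max_rate: float = 10.0) -> List[str]:
    """Return names of UI processes that could run on the server.

    Assumed rule: skip latency-sensitive and frequently used processes, and
    move the rest only if processing time plus one round trip fits the budget.
    """
    moved = []
    for s in stats:
        if s.latency_sensitive or s.calls_per_minute > max_rate:
            continue
        if s.local_ms + round_trip_ms <= budget_ms:
            moved.append(s.name)
    return moved


# Illustrative measurements, not real data.
stats = [
    OpStats("swipe_page", 40.0, 5.0, True),
    OpStats("open_settings", 0.5, 20.0, False),
    OpStats("filter_list", 30.0, 15.0, False),
    OpStats("preview_image", 2.0, 120.0, False),
]

print(select_for_server(stats, round_trip_ms=60.0))
```

Because the decision depends on `round_trip_ms`, re-running the selection as network conditions change gives a different split, which mirrors the dynamic behavior the article describes.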

This newly developed virtualization technology enables smart devices running web applications to be used for business operations in a mobile environment, with both security and the high level of operability characteristic of smart devices. In addition, by applying the technology to web applications that communicate with smart glasses and other wearables, which are increasingly coming into practical use, thin client environments can be extended to web applications that run on smart devices and wearables. One example is work that deals with large amounts of data requiring a high level of confidentiality.

Fujitsu Laboratories will work to improve the virtualization technology’s multiplex execution performance on servers and make its operations analysis highly accurate with the goal of practical implementation in fiscal 2016. In addition, rather than just applications for servers or storage equipment, Fujitsu Laboratories will proceed with developing technologies for distributed execution tailored to devices, network equipment, and servers in accordance with execution conditions or the network environment in order to create hyperconnected clouds, in which a variety of clouds are linked together, such as for an Internet of Things environment.

References: http://phys.org/