Month: July 2015

New energy cell can store up solar energy for release at night

Researchers have, for the first time, found a way to store electrons generated by photoelectrochemical (PEC) cells for long periods of time

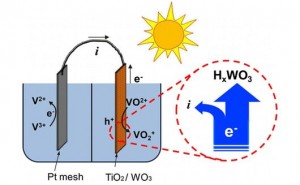

A photoelectrochemical cell (PEC) is a special type of solar cell that gathers the Sun’s energy and transforms it into either electricity or chemical energy used to split water and produce hydrogen for use in fuel cells. In an advance that could help this clean energy source play a stronger role within the smart grid, researchers at the University of Texas at Arlington have found a way to store the electricity generated by a PEC cell for extended periods of time and allow electricity to be delivered around the clock.

Until now, the electricity generated by a PEC cell could not be stored effectively, as the electrons would quickly “disappear” into a lower-energy state. This meant that these cells were not a viable solution for a clean-energy grid, as the electricity had to be used very shortly after being produced: that is, on sunny days, when standard PV panels are already producing energy at full tilt.

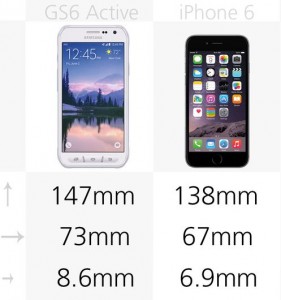

Now, researchers Fuqiang Liu and colleagues have created a PEC cell that includes a specially designed photoelectrode (the component that converts incoming photons into electrons). Unlike previous designs, their hybrid tungsten trioxide/titanium dioxide (WO3/TiO2) photoelectrode can store electrons effectively for long periods of time, paving the way for PEC cells to play a bigger role within a smart energy grid.

The system also includes a vanadium redox-flow battery (VRB). This is an established type of energy storage cell that is well suited to the needs of the electrical grid: it can stay idle for long periods without losing charge, is much safer than a lithium-ion cell (though less energy-dense), is nearly immune to temperature extremes, and can be scaled up very easily, simply by increasing the size of its electrolyte tanks.

According to the researchers, the vanadium flow battery works especially well with their hybrid electrode, boosting the electric current, offering high reversibility (95 percent Faradaic efficiency) and enabling high-capacity energy storage.
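Faradaic efficiency is the ratio of the charge recovered when a cell discharges to the charge that went in while it was charging, so 95 percent means roughly 5 percent of the stored charge is lost. As a minimal sketch, assuming constant-current charge and discharge steps, the calculation looks like this; the helper names and the current and time values are hypothetical, chosen only to reproduce a 95 percent figure, and are not data from the study.

```python
def charge_coulombs(current_amps: float, duration_s: float) -> float:
    """Charge passed at a constant current: Q = I * t, in coulombs."""
    return current_amps * duration_s


def faradaic_efficiency(q_discharge: float, q_charge: float) -> float:
    """Fraction of the charge stored during charging that is recovered on discharge."""
    if q_charge <= 0:
        raise ValueError("charge input must be positive")
    return q_discharge / q_charge


# Hypothetical values for illustration only (not measurements from the paper).
q_in = charge_coulombs(current_amps=0.010, duration_s=3600)   # charging for one hour
q_out = charge_coulombs(current_amps=0.010, duration_s=3420)  # discharging for 57 minutes
print(f"Faradaic efficiency: {faradaic_efficiency(q_out, q_in):.0%}")
```

Real measurements integrate a time-varying current rather than multiplying a constant one by time, but the ratio being reported is the same.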

“We have demonstrated simultaneously reversible storage of both solar energy and electrons in the cell,” says lead author of the paper Dong Liu. “Release of the stored electrons under dark conditions continues solar energy storage, thus allowing for continuous storage around the clock.”

The team is now working on building a larger prototype, with the hope that this technology could be used to better integrate photoelectrochemical cells within the smart grid.

References: http://www.gizmag.com/

Is big data still big news?

People talk about ‘data being the new oil’, a natural resource that companies need to exploit and refine. But is this really true or are we in the realm of hype? Mohamed Zaki explains that, while many companies are already benefiting from big data, it also presents some tough challenges.

Government agencies have announced major plans to accelerate big data research and, in 2013, according to a Gartner survey, 64% of companies said they were investing – or intending to invest – in big data technology. But Gartner also pointed out that while companies seem to be persuaded of the merits of big data, many are struggling to get value from it. The problem may be that they tend to focus on the technological aspects of data collection rather than thinking about how it can create value.

But big data is already creating value for some very large companies and some very small ones. Established companies in a number of sectors are using big data to improve their current business practices and services and, at the other end of the spectrum, start-ups are using it to create a whole raft of innovative products and business models.

At the Cambridge Service Alliance, part of the University of Cambridge’s Institute for Manufacturing, we work with a number of leading companies from a range of sectors and see first-hand both the opportunities and challenges associated with big data.

Take a company which makes, sells and leases its products and also provides maintenance and repair services for them. Its products contain sensors that collect vast amounts of data, allowing the company to monitor them remotely and diagnose any problems.

If this data is combined with existing operational data, advanced engineering analytics and forward-looking business intelligence, the company can offer a ‘condition-based monitoring service’, able to analyse and predict equipment faults. For the customer, unexpected downtime becomes a thing of the past, repair costs are reduced and the intervals between services increased. Intelligent analytics can even show them how to use the equipment at optimum efficiency. Original equipment manufacturers (OEMs) and dealers see this as a way of growing their parts and repairs business and increasing the sales of spare parts. It also strengthens relationships with existing customers and attracts new ones looking for a service maintenance contract.
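One simple way such a service might turn sensor data into a fault warning is to watch a rolling average rather than individual readings, so that a single spike does not trigger a call-out but a sustained rise does. The sketch below assumes a hypothetical vibration sensor, window size and alert threshold; real condition-based monitoring systems typically combine many signals with far richer models.

```python
from collections import deque
from typing import Iterable, List


def flag_readings(readings: Iterable[float], window: int, limit: float) -> List[int]:
    """Return the indices where the mean of the last `window` readings exceeds `limit`.

    Hypothetical rule: a sustained rise, not a single spike, raises a maintenance alert.
    """
    recent: deque = deque(maxlen=window)  # keeps only the most recent `window` values
    alerts: List[int] = []
    for i, value in enumerate(readings):
        recent.append(value)
        # Only judge once a full window of readings is available.
        if len(recent) == window and sum(recent) / window > limit:
            alerts.append(i)
    return alerts


# Hypothetical vibration readings (mm/s) from one machine's sensor:
# a one-off spike at index 4, then a gradual upward drift.
vibration = [2.1, 2.0, 2.2, 2.1, 7.0, 2.2, 2.3, 4.8, 5.1, 5.4, 5.9, 6.2]
print(flag_readings(vibration, window=4, limit=4.5))
```

The window length trades responsiveness against false alarms: a shorter window reacts sooner but lets isolated spikes through.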

In a completely different sector, an education revolution is under way. Big data is underpinning a new approach to learning known as ‘competency-based education’, which is currently being developed in the USA. A group of universities and colleges is using data to personalise the delivery of their courses so that each student progresses at a pace that suits them, whenever and wherever they like.

In the old model, thousands of students arrive on campus at the start of the academic year and, regardless of their individual levels of attainment, work their way through their course until the point of graduation. In the new data-driven model, universities will be able to monitor and measure a student’s performance, see how long it takes them to complete particular assignments and with what degree of success. Their curriculum is tailored to take account of their preferences, their achievements and any difficulties they may have. For the students, this means a much more flexible way of working which suits their needs and gives them the opportunity to graduate more quickly. For the institutions, it means delivering better quality education and hence achieving better student outcomes, and being able to deploy their staff more efficiently and more in line with their skills and interests.
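To make the idea concrete, here is a hypothetical sketch of the kind of per-student record such a system might keep: each attempt stores the unit, the time taken and the score, and a simple rule decides when the student has mastered a unit. The `Attempt` class, the 0.8 mastery threshold and the two-attempt minimum are illustrative assumptions, not a description of any real institution’s system.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Attempt:
    unit: str
    minutes: float  # time taken to complete the assignment
    score: float    # fraction correct, from 0.0 to 1.0


def ready_to_advance(attempts: List[Attempt], unit: str,
                     mastery: float = 0.8, min_attempts: int = 2) -> bool:
    """Hypothetical rule: move on once enough attempts average at least `mastery`."""
    scores = [a.score for a in attempts if a.unit == unit]
    # max(..., 1) guarantees at least one score before calling mean().
    return len(scores) >= max(min_attempts, 1) and mean(scores) >= mastery


def average_minutes(attempts: List[Attempt]) -> Dict[str, float]:
    """Average time spent per unit, e.g. to spot where a student is struggling."""
    by_unit: Dict[str, List[float]] = {}
    for a in attempts:
        by_unit.setdefault(a.unit, []).append(a.minutes)
    return {unit: mean(times) for unit, times in by_unit.items()}


log = [
    Attempt("algebra", minutes=30, score=0.90),
    Attempt("algebra", minutes=25, score=0.85),
    Attempt("geometry", minutes=50, score=0.60),
]
print(ready_to_advance(log, "algebra"), ready_to_advance(log, "geometry"))
print(average_minutes(log))
```

Here the student could move on from algebra, while geometry would stay open until more evidence of mastery accumulates.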

To get value out of big data, however, organisations need to be able to capture, store, analyse, visualise and interpret it, and none of these steps is straightforward.

One of the main barriers seems to be the lack of a ‘data culture’, where data is wholly embedded in organisational thinking and practices. But companies also face a very long list of challenges to do with data management and processing.

Condition-monitoring services, for example, rely on data transmission, often using satellite systems or digital telephone systems: sometimes there simply is no coverage. Most organisations have vast amounts of data stored in different systems in a variety of formats: bringing these together in one place is difficult.
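As a small illustration of why bringing data together is hard, the sketch below merges two hypothetical exports that describe the same machines: one is CSV, the other JSON, and they even disagree on how machine IDs are written. The field names and the normalisation rule are assumptions made for the example.

```python
import csv
import io
import json
from typing import Dict

# Two hypothetical exports from different systems describing the same fleet.
CSV_EXPORT = """machine_id,hours_run
M-01,1200
M-02,860
"""
JSON_EXPORT = '[{"id": "m-01", "last_fault": "overheat"}, {"id": "M-03", "last_fault": null}]'


def normalise_id(raw: str) -> str:
    """Agree on one key format so that records from both systems line up."""
    return raw.strip().upper()


def merge_sources(csv_text: str, json_text: str) -> Dict[str, Dict[str, object]]:
    """Combine both exports into one record per machine, keyed by normalised ID."""
    merged: Dict[str, Dict[str, object]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = normalise_id(row["machine_id"])
        merged.setdefault(key, {})["hours_run"] = int(row["hours_run"])
    for record in json.loads(json_text):
        key = normalise_id(record["id"])
        merged.setdefault(key, {})["last_fault"] = record["last_fault"]
    return merged


for machine, fields in sorted(merge_sources(CSV_EXPORT, JSON_EXPORT).items()):
    print(machine, fields)
```

Even in this toy case, some machines end up with only part of the picture, which is typical when systems were never designed to share data.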

The whole issue of data ownership is problematic in a service contract environment, where the customer considers it to be their data, generated by their usage, while the service provider may consider it to be theirs as it is processed by their system.

In complex data landscapes, security – managing access to the data and creating robust audit trails – can also be a major challenge, as, sometimes, is complying with the legislation around data protection. Many organisations also lack expertise in data- and text-mining techniques, which include statistical modelling, forecasting, predictive modelling and agent-based models (or optimisation simulations).

Where established organisations may find it hard to move away from their entrenched ways of doing things, start-ups have the luxury of being able to invent new business models at will. At the Cambridge Service Alliance we have also been looking at these new ways of doing things in order to understand what business models that rely on data really look like. The results should help companies of all sizes – not just start-ups – understand how big data may be able to transform their businesses. We have identified six distinct types of business model:

Free data collector and aggregator: companies such as Gnip collect data from vast numbers of different, mostly free, sources then filter it, enrich it and supply it to customers in the format they want.

Analytics-as-a-service: these are companies providing analytics, usually on data provided by their customers. Sendify, for example, provides businesses with real-time caller intelligence, so when a call comes in they see a range of additional information about the caller, which helps them maximise the sales opportunity.

Data generation and analysis: these could be companies that generate their own data through crowdsourcing, or through smartphones or other sensors. They may also provide analytics. Examples include GoSquared, Mixpanel and Spinnakr, which collect data by using a tracking code on their customers’ websites, analyse the data and provide reports on it using a web interface.

Free data knowledge discovery: the model here is to take freely available data and analyse it. Gild, for example, helps companies recruit developers by automatically evaluating the code they publish and scoring it.

Data-aggregation-as-a-service: these companies aggregate data from multiple internal sources for their customers, then present it back to them through a range of user-friendly, often highly visual interfaces. In the education sector, Always Prepped helps teachers monitor their students’ performance by aggregating data from multiple education programmes and websites.

Multi-source data mash-up and analysis: these companies aggregate data provided by their customers with other external, mostly free data sources, and perform analytics on this data to enrich or benchmark customer data. Welovroi, for example, is a web-based digital marketing, monitoring and analysis tool that enables companies to track a large number of different metrics. It also integrates external data and allows companies to benchmark the success of their marketing campaigns.

So what does this tell us? That agile and innovative start-ups are creating entirely new business models based on big data and being hugely successful at it. These models can also inspire larger companies (SMEs as much as multinationals) to think about new ways in which they can capture value from their data.

But these more established companies face significant barriers to doing so and may have to deconstruct their current business models if they are to succeed. For fleet and engine manufacturers, this could mean moving to a condition-based monitoring service; in the education sector, it could mean delivering teaching in a completely new way. If companies can’t innovate when the opportunity arises, they may lose competitive advantage and be left struggling to ‘catch up’ with their competitors.

References: http://phys.org/

New Brain-Like Computer May Solve World’s Most Complex Math Problems

A new computer prototype called a “memcomputer” works by mimicking the human brain, and could one day perform notoriously complex tasks like breaking codes, scientists say.

These new, brain-inspired computing devices also could help neuroscientists better understand the workings of the human brain, researchers say.

In a conventional microchip, the processor, which executes computations, and the memory, which stores data, are separate components. Data must constantly be relayed between the two, which consumes time and energy and limits the performance of standard computers.

In contrast, Massimiliano Di Ventra, a theoretical physicist at the University of California, San Diego, and his colleagues are building “memcomputers,” made up of “memprocessors,” that both process and store data. This setup mimics the neurons that make up the human brain, with each neuron serving as both the processor and the memory. The building blocks of memcomputers were first predicted theoretically in the 1970s and were first manufactured in 2008.

Now, Di Ventra and his colleagues have built a prototype memcomputer they say can efficiently solve one type of notoriously difficult computational problem. Moreover, they built their memcomputer from standard microelectronics.

“These machines can be built with available technology,” Di Ventra told Live Science.

The scientists investigated a class of problems known as NP-complete. For this type of problem, a proposed solution can be checked quickly, but no known method can quickly find a solution in the first place.

One example of such a conundrum is the “traveling salesman problem,” in which someone is given a list of cities and asked to find the shortest possible route that starts from one city, visits every other city exactly once and returns to the starting city. Checking that a route visits every city exactly once is quick, but confirming by brute force that it is the shortest means comparing it against every other possible route, a strategy that grows vastly more complex as the number of cities increases.
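The brute-force strategy can be sketched in a few lines: fix the starting city, try every ordering of the remaining cities and keep the shortest closed tour. With n cities that is (n - 1)! orderings, which is why the approach becomes impractical so quickly. This is a plain classical search for illustration only; faster exact methods such as the Held-Karp dynamic programming algorithm exist, though their running time still grows exponentially. The city names and coordinates are made up.

```python
from itertools import permutations
from math import dist
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]


def route_length(route: Sequence[str], coords: Dict[str, Point]) -> float:
    """Length of a closed tour that ends back at its starting city."""
    n = len(route)
    return sum(dist(coords[route[i]], coords[route[(i + 1) % n]]) for i in range(n))


def is_valid_tour(route: Sequence[str], cities: Sequence[str]) -> bool:
    """The quick check: every city appears exactly once."""
    return len(route) == len(cities) and set(route) == set(cities)


def shortest_tour(coords: Dict[str, Point]) -> Tuple[Optional[Tuple[str, ...]], float]:
    """Brute force: fix the first city and try every ordering of the others."""
    start, *rest = list(coords)
    best_route: Optional[Tuple[str, ...]] = None
    best_length = float("inf")
    for order in permutations(rest):  # (n - 1)! candidate tours
        route = (start, *order)
        length = route_length(route, coords)
        if length < best_length:
            best_route, best_length = route, length
    return best_route, best_length


# Hypothetical cities at the corners of a unit square.
cities = {"A": (0.0, 0.0), "B": (1.0, 0.0), "C": (1.0, 1.0), "D": (0.0, 1.0)}
route, length = shortest_tour(cities)
print(route, length, is_valid_tour(route, list(cities)))
```

Adding a single city multiplies the number of tours to try by roughly the number of cities, so the work explodes long before n reaches the sizes of real routing problems.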

The memprocessors in a memcomputer can work collectively and simultaneously to find every possible solution to such conundrums.

The new memcomputer solves the NP-complete version of what is called the subset sum problem. In this problem, one is given a set of integers (whole numbers such as 1 and -1, but not fractions such as 1/2) and must determine whether some non-empty subset of those integers sums to zero.
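The asymmetry that defines NP-complete problems shows up clearly in subset sum. In the sketch below, checking a proposed subset takes a single pass over it, while the naive search may have to try all 2^n - 1 non-empty subsets of n numbers. This is an ordinary exhaustive search on a conventional computer, included only to illustrate the problem; it says nothing about how the memcomputer itself encodes or solves it.

```python
from itertools import combinations
from typing import Optional, Sequence, Tuple


def verify(candidate: Sequence[int], numbers: Sequence[int]) -> bool:
    """Fast check of a proposed answer: non-empty, drawn from `numbers`, sums to zero."""
    pool = list(numbers)
    for x in candidate:
        if x not in pool:
            return False
        pool.remove(x)  # each number may be used at most as often as it appears
    return len(candidate) > 0 and sum(candidate) == 0


def find_zero_subset(numbers: Sequence[int]) -> Optional[Tuple[int, ...]]:
    """Exhaustive search over all non-empty subsets, smallest subsets first."""
    for size in range(1, len(numbers) + 1):
        for subset in combinations(numbers, size):
            if sum(subset) == 0:
                return subset
    return None


numbers = [-7, -3, -2, 5, 8]
answer = find_zero_subset(numbers)
print(answer, verify(answer, numbers) if answer else None)
```

With 5 numbers there are only 31 subsets to try, but each extra number doubles that count, while the cost of `verify` grows only modestly.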

“If we work with a different paradigm of computation, those problems that are notoriously difficult to solve with current computers can be solved more efficiently with memcomputers,” Di Ventra said.

But solving this type of problem is just one advantage these computers have over traditional computers. “In addition, we would like to understand if what we learn from memcomputing could teach us something about the operation of the brain,” Di Ventra said.

Quantum computing

To solve NP-complete problems, scientists are also pursuing a different strategy involving quantum computers, which use components known as qubits to explore many possible solutions to a problem at once. However, quantum computers have limitations: for instance, they usually operate at extremely low temperatures.

In contrast, memcomputers “can be built with standard technology and operate at room temperature,” Di Ventra said. In addition, memcomputers could tackle problems that scientists are exploring with quantum computers, such as code breaking.

However, the new memcomputer does have a major limitation: It is difficult to scale this proof-of-concept version up to a multitude of memprocessors, Di Ventra said. The way the system encodes data makes it vulnerable to random fluctuations that can introduce errors, and a large-scale version would require error-correcting codes that would make this system more complex and potentially too cumbersome to work quickly, he added.
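To see why error correction adds complexity, consider the simplest error-correcting code, a repetition code: each bit is stored three times and read back by majority vote. The sketch below is a generic illustration chosen for clarity, not the scheme the researchers would use; it shows the trade-off, tolerating any single flipped copy per bit at the cost of tripling the storage and adding voting logic. The noise model (independent random bit flips) is an assumption.

```python
import random
from typing import List


def encode(bits: List[int], copies: int = 3) -> List[int]:
    """Repetition code: store each bit `copies` times in a row."""
    return [b for b in bits for _ in range(copies)]


def add_noise(signal: List[int], flip_prob: float, rng: random.Random) -> List[int]:
    """Model random fluctuations: flip each stored bit with probability `flip_prob`."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in signal]


def decode(signal: List[int], copies: int = 3) -> List[int]:
    """Majority vote within each group of `copies` stored bits."""
    return [
        int(sum(signal[i:i + copies]) > copies // 2)
        for i in range(0, len(signal), copies)
    ]


rng = random.Random(42)
message = [rng.randint(0, 1) for _ in range(10_000)]
raw_errors = sum(a != b for a, b in zip(message, add_noise(message, 0.05, rng)))
coded = decode(add_noise(encode(message), 0.05, rng))
coded_errors = sum(a != b for a, b in zip(message, coded))
print(f"unprotected errors: {raw_errors}, with repetition code: {coded_errors}")
print(f"storage used: {len(encode(message))} bits for {len(message)} bits of data")
```

The code cuts the error rate sharply, but only by spending extra hardware and processing on every bit, which is the kind of overhead that could slow a large-scale system down.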

Still, Di Ventra said it should be possible to build memcomputers that encode data in a different way. This would make them less susceptible to such problems, and hence scalable to a very large number of memprocessors.

References: http://www.livescience.com/