Almost universal SERS sensor could change how we sniff out small things

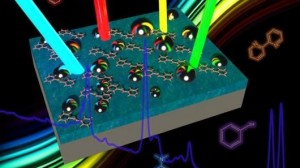

A new almost-universal SERS substrate could be the key to cheaper and easier sensors for drugs, explosives, or anything else (Credit: University at Buffalo)

Identifying fraudulent paintings based on electrochemical data, highlighting cancerous cells in a sea of healthy ones, and identifying different strains of bacteria in samples of food are all applications of surface-enhanced Raman spectroscopy (SERS), a sensing technique that has grown in demand along with our desire for precise, instantaneous information. However, the technology has largely failed to reach commercial use because the chips it requires are difficult and expensive to make, are each limited to a particular known substance, and are consumed upon use. Researchers led by a team from the University at Buffalo (UB) aim to change that with an almost-universal substrate that is also low-cost, opening up more opportunities for powerful analysis of our environment.

Though SERS can seem complicated at first glance, it forms a critical part of testing for explosives, identifying toxins in food, and other applications in public health and safety, medicine, and research.

The technique relies on the unique electromagnetic properties that chemical compounds show when they are stimulated with laser light of varying wavelengths while interacting with a surface designed to enhance the response (the “surface” in the name SERS). Each compound has a distinct spectral fingerprint, so a researcher can distinguish between compounds that are invisible to the human eye without doping the sample with labeling chemicals or needing a large sample.

Currently, however, most surfaces or substrates available on a commercial chip are optimized for only one wavelength of light, meaning scientists working with multiple compounds may need several chips to identify all their samples. The problem is worse for unknown samples, which by their nature would require testing on multiple substrates.

The research by the team from UB and Fudan University in China introduces a substrate with a broadband nanostructure that “traps” the wide range of light most often used in SERS analysis, between 450 and 1100 nm.
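To make the 450 to 1100 nm figure concrete, here is a minimal Python sketch that checks whether a laser line, and the light it scatters, falls inside that band. It uses the standard definition of Raman shift in wavenumbers; the laser wavelengths and the 1000 cm⁻¹ shift are illustrative assumptions and do not come from the researchers.

```python
# Broadband range quoted in the article, in nanometres.
BAND_NM = (450.0, 1100.0)

def stokes_wavelength_nm(excitation_nm, shift_cm1):
    """Wavelength of Stokes-scattered light for a given Raman shift.

    Uses the standard relation (wavelengths in nm, shift in cm^-1):
        shift = 1e7 / excitation - 1e7 / scattered
    """
    return 1e7 / (1e7 / excitation_nm - shift_cm1)

def in_band(wavelength_nm, band=BAND_NM):
    """True if the wavelength lies inside the quoted band (inclusive)."""
    return band[0] <= wavelength_nm <= band[1]

# Assumed example laser lines (nm) and an assumed Raman shift (cm^-1);
# both are placeholders for illustration, not values from the study.
ASSUMED_LASERS_NM = [532.0, 633.0, 785.0, 1064.0]
ASSUMED_SHIFT_CM1 = 1000.0

for laser in ASSUMED_LASERS_NM:
    scattered = stokes_wavelength_nm(laser, ASSUMED_SHIFT_CM1)
    print(
        f"laser {laser:.0f} nm (in band: {in_band(laser)}), "
        f"scattered {scattered:.1f} nm (in band: {in_band(scattered)})"
    )
```

The scattered light always sits at a longer wavelength than the laser that excites it, which is one reason a substrate covering a wide band is useful: both the excitation and the returning signal have to be enhanced.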

The surface is composed of a thin film of silver or aluminum acting as a mirror, and a dielectric layer of silica or alumina that separates the “mirror” from a layer of randomly applied silver nanoparticles. Because the nanoparticles are applied randomly rather than patterned, the construction also avoids expensive lithographic fabrication techniques.

Researcher Nan Zhang summed up the importance of the design by comparing it to a skeleton key.

“Instead of needing all these different substrates to measure Raman signals excited by different wavelengths, you’ll eventually need just one,” said Zhang. “Just like a skeleton key that opens many doors.”

Perhaps soon these “keys” will be available for airport screening, counterfeit protection, chemical weapon detection and a host of other purposes requiring flexible, cheap sensors.

“The applications of such a device are far-reaching,” said Kai Liu, a PhD candidate in electrical engineering at UB. “The ability to detect even smaller amounts of chemical and biological molecules could be helpful with biosensors that are used to detect cancer, malaria, HIV and other illnesses.”

References: http://www.gizmag.com/