Smartphone and tablet could be used for cheap, portable medical biosensing

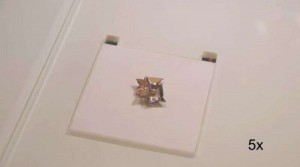

A diagram of the CNBP system (Credit: Centre for Nanoscale BioPhotonics)

As mobile technology progresses, we’re seeing more and more low-cost diagnostic systems built for use in developing nations and remote locations. One of the latest consists of little more than a smartphone, a tablet, a polarizer and a box, and can test body fluid samples for diseases such as arthritis, cystic fibrosis and acute pancreatitis.

Developed at Australia’s Centre for Nanoscale BioPhotonics (CNBP), the setup relies on fluorescence sensing, a technique in which dyes added to a sample cause specific biomarkers to glow when exposed to bright light.

To use it, clinicians deposit a dyed fluid sample into a well plate (essentially a transparent tray of small sample-holding wells), set the plate on the screen of a tablet inside the box, and place a piece of polarizing glass over the well that contains the fluid. They then rest their smartphone on top of the box so that its camera lines up with that well.

Once the tablet is switched on, the light from its screen causes the targeted biomarkers to fluoresce (provided they are present in the sample). Because the light from an LCD screen is polarized, the polarizer blocks much of the tablet’s own light while letting the fluorescence from the biomarkers through, so that glow stands out. An app on the phone then analyzes the color and intensity of the fluorescence to help make a diagnosis.

“This type of fluorescent testing can be carried out by a variety of devices but in most cases the readout requires professional research laboratory equipment, which costs many tens of thousands of dollars,” says Ewa Goldys, CNBP’s deputy director. “What we’ve done is develop a device with a minimal number of commonly available components … The results can be analyzed by simply taking an image and the readout is available immediately.”

The free smartphone app will be available from June 15th via the project website. A paper on the research was recently published in the journal Sensors.

References: http://www.gizmag.com/